Ink to Code: Technical SEO Considerations for News Publishers

Published 2024-03-04

In the second in this series of SEO articles specifically for news websites and publishers, Emina Demiri-Watson is back to deep-dive (yeah, we said it) into technical SEO.

One of the elements every SEO needs to grapple with, whether they are happy about it or not, is technical. And technical SEO for news publishers comes with its own set of distinct challenges and approaches.

Considering the challenging SEO environment publishers operate in, it’s not surprising that SEOs working in the field need to think inside and outside of the box to get results. Challenges such as legacy technology, commercialization that often relies on heavy use of JavaScript, and heavy websites with a ton of content need to be understood by publisher SEOs (using the right tools).

Then there is the unique set of criteria we suspect Google employs when crawling, indexing and ranking news sites. All of this will impact the technical SEO setup!

News website technical SEO setup

The ‘need for speed’ that is unique to publishers means that some of the general pitfalls of SEO are even more pressing for news outlets. One of the most important considerations is ensuring your website is optimized for speed and content freshness.

While Google doesn't specifically outline the differences between the technical setup of crawling, indexing and ranking for publishers, it is obvious that there are some differences.

In normal circumstances, Google has time to do all the processes and checks that are needed. However, when it comes to news, the process just takes too long! Articles are relevant at the moment in time that they’re published, they come out fast, and Google can’t keep up. This is because of the process Google goes through to crawl, render, and index a page in full (more on this below).

From there, it is not a big logical leap to conclude that to rank your news in search in general and in Google's “News surfaces” in particular, you need to ensure that your technical set-up facilitates that ‘need for speed’.

Extra tip:

As a publisher, you also need to have a profile on Google Publisher Center, an interface that helps publishers submit and manage their content in Google News. While you no longer need to apply for Google News as such, having a Publisher Center profile is helpful for ranking in News and Discover (as is a news sitemap – more on that later!). It also provides your readers with the opportunity to follow your publications on Google News, which builds publication loyalty and readership.

Minimum technical SEO considerations for news publishers

Technical is one of the more complex SEO fields and it can be daunting, particularly for SEOs just starting. The bad news is that it doesn't get any easier with news publishers! But before you get lost down the rabbit hole of technical SEO, aim to get the basics right.

Here are 6 minimum technical SEO considerations every publisher should pay attention to:

1. Ensure JavaScript is used correctly

Even under normal circumstances, having a JavaScript-heavy website can be a pain. When it comes to publishers, it can be a death sentence. Google crawling and indexing usually happens in two stages:

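- Stage 1: Googlebot crawls the page and indexes based on the HTML source code

- Stage 2: Google renders the page, executing its JavaScript, and updates the index based on the rendered content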

With news, and because of the need for speed, this second stage often doesn’t even happen – at least not in time anyway. If article headlines and copy are being updated by JavaScript, the story might be changing more quickly than Google is able to render the pages to ‘see’ those changes.

This is likely why Google advises publishers to keep their content in pure HTML.

Source: Google News Technical guidelines

This problem is compounded for publishers by advertising monetization. A lot of ads are loaded using heavy JavaScript. This is why it’s important to ensure your ad assets load AFTER your main content.
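A quick way to sanity-check this is to look at the raw HTML your server returns, before any JavaScript runs, and confirm the headline is already there. The following is a minimal sketch using only the Python standard library. The function name `headline_in_raw_html` and the two sample pages are made up for illustration; in practice you would feed it the response body of a real article URL.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects visible text and ignores anything inside <script> or <style>."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def headline_in_raw_html(raw_html: str, headline: str) -> bool:
    """Return True if the headline is present before any JavaScript executes."""
    parser = TextExtractor()
    parser.feed(raw_html)
    parser.close()
    visible_text = " ".join(parser.chunks)
    return headline.lower() in visible_text.lower()


# Hypothetical sample pages: one server-rendered, one filled in by JavaScript.
server_rendered = "<html><body><h1>Budget vote passes</h1><p>Story text.</p></body></html>"
client_rendered = (
    "<html><body><div id='app'></div>"
    "<script>document.getElementById('app').innerHTML = '<h1>Budget vote passes</h1>';</script>"
    "</body></html>"
)

print(headline_in_raw_html(server_rendered, "Budget vote passes"))
print(headline_in_raw_html(client_rendered, "Budget vote passes"))
```

If the check fails for a live article, the headline is probably being injected client-side and depends on the rendering stage to be seen.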

Learn more about the JavaScript “gap” in our panel discussion with renowned SEO Consultants, Aleyda Solis and Arnout Hellemans, and Sam Torres of Gray Dot Company. Watch the free webinar

2. Implement structured data

Adding structured data for SEO to any website is a no-brainer. But for news publishers, it is a must!

Going back to the need for speed, one way for Google to quickly figure out what's going on is to read the structured data markup publishers add to their news articles. At the very minimum, this should include NewsArticle Schema markup.
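As a rough illustration, here is one way a NewsArticle JSON-LD block could be generated from article data. This is a sketch, not a complete implementation: the function name, the sample values and the choice of properties are assumptions, so check Google's structured data documentation for the properties it currently recommends.

```python
import json
from datetime import datetime, timezone


def news_article_jsonld(headline, url, author_name, published, modified, image_url):
    """Build a NewsArticle JSON-LD string from basic article fields."""
    data = {
        "@context": "https://schema.org",
        "@type": "NewsArticle",
        "headline": headline,
        "mainEntityOfPage": url,
        "image": [image_url],
        "datePublished": published.isoformat(),
        "dateModified": modified.isoformat(),
        "author": [{"@type": "Person", "name": author_name}],
    }
    return json.dumps(data, indent=2)


# Hypothetical article values, for illustration only.
markup = news_article_jsonld(
    headline="Budget vote passes",
    url="https://example.com/2024/03/04/budget-vote-passes",
    author_name="Jane Reporter",
    published=datetime(2024, 3, 4, 9, 0, tzinfo=timezone.utc),
    modified=datetime(2024, 3, 4, 10, 30, tzinfo=timezone.utc),
    image_url="https://example.com/images/budget-vote.jpg",
)
print(markup)
```

The output goes inside a `<script type="application/ld+json">` element in the article's HTML.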

Some other interesting and more specific options here are also:

- OpinionNewsArticle

- AnalysisNewsArticle

- AskPublicNewsArticle

- ReviewNewsArticle

- Author (a Schema property), which can be used with any Schema type that falls under the CreativeWork or Review classification, e.g. NewsArticle.

- Events Schema - particularly relevant for publishers who also run events!

- Video Schema

You can add your Schema markup directly into the code or you can use a plugin or a Schema markup generator and Google Tag Manager (GTM). For example, we suggest Schema Pro as an option for publishers on WordPress websites. There are a ton of Schema generators out there, the one we use is the Merkle Schema Generator.

For example, our client Retail Bulletin runs events and, for them, event Schema is a must. Since there aren’t many events, we opted for implementation using the generator and GTM.

The process:

Step 1: Use the generator to generate your JSON-LD markup

Step 2: Create a new Custom HTML Tag in Google Tag Manager

Step 3: Paste JSON-LD markup into the Custom HTML text box

Step 4: Save your tag

Step 5: Create a new page view trigger and adjust it if you don't want it firing on all pages

Step 6: Connect your new trigger to your Custom HTML tag

Step 7: Test all is firing using Preview

Step 8: Publish the new container version

Step 9: Test your markup using Rich Results Test and/or Schema Markup Validator
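If you would rather script steps 1 and 3 than use a generator, the markup pasted into the Custom HTML box is simply a JSON-LD object wrapped in a script element. The sketch below assumes a hypothetical helper, `event_jsonld_tag`, and made-up event details. It only covers a handful of Event properties, so validate the result as in Step 9.

```python
import json


def event_jsonld_tag(name, start_date, location_name, url):
    """Return Event JSON-LD wrapped in a script element, ready to paste into a tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Event",
        "name": name,
        "startDate": start_date,
        "location": {"@type": "Place", "name": location_name},
        "url": url,
    }
    # Escape "</" so text inside the JSON can never close the script element early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Hypothetical event, for illustration only.
tag = event_jsonld_tag(
    name="Example Retail Summit",
    start_date="2024-10-09T09:00:00+01:00",
    location_name="Example Conference Centre, London",
    url="https://example.com/events/retail-summit",
)
print(tag)
```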

3. Create a news sitemap

Another tip related to the need for speed is the News XML sitemap. Your news sitemap needs to show ONLY FRESH articles: those published in the last 48 hours.

A news sitemap can hold up to 1,000 <news:news> tags. Larger publishers that go over that limit should split their sitemap into several files.

One of the most important elements in your news sitemap is your title reference <news:title> . Try and make your titles user-friendly and optimized for clicks (more on that later in the on-page section).

Once you have created your news sitemap, don't forget to submit it via Google Search Console. This will also help with debugging if there are any issues with the technical setup.
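To make the rules above concrete, here is a minimal sketch of a news sitemap generator that keeps only articles from the last 48 hours and splits the output at 1,000 entries. The function name, the shape of the `articles` list and the sample values are assumptions. Most CMSs and SEO plugins will produce this file for you, so treat it as an illustration of the format rather than a drop-in tool.

```python
from datetime import datetime, timedelta, timezone
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
NEWS_NS = "http://www.google.com/schemas/sitemap-news/0.9"
MAX_NEWS_ENTRIES = 1000
FRESHNESS_WINDOW = timedelta(hours=48)


def build_news_sitemaps(articles, publication_name, language, now):
    """Return a list of news sitemap XML strings, each with at most 1,000 entries.

    `articles` is a list of dicts with "url", "title" and a timezone-aware
    "published" datetime (an assumed shape for this sketch).
    """
    fresh = [a for a in articles if now - a["published"] <= FRESHNESS_WINDOW]
    fresh.sort(key=lambda a: a["published"], reverse=True)

    sitemaps = []
    for start in range(0, len(fresh), MAX_NEWS_ENTRIES):
        urlset = ET.Element("urlset", {"xmlns": SITEMAP_NS, "xmlns:news": NEWS_NS})
        for article in fresh[start:start + MAX_NEWS_ENTRIES]:
            url = ET.SubElement(urlset, "url")
            ET.SubElement(url, "loc").text = article["url"]
            news = ET.SubElement(url, "news:news")
            publication = ET.SubElement(news, "news:publication")
            ET.SubElement(publication, "news:name").text = publication_name
            ET.SubElement(publication, "news:language").text = language
            ET.SubElement(news, "news:publication_date").text = article["published"].isoformat()
            ET.SubElement(news, "news:title").text = article["title"]
        sitemaps.append(ET.tostring(urlset, encoding="unicode"))
    return sitemaps


# Hypothetical articles: one fresh, one older than 48 hours.
now = datetime(2024, 3, 4, 12, 0, tzinfo=timezone.utc)
articles = [
    {"url": "https://example.com/fresh-story", "title": "Fresh story",
     "published": now - timedelta(hours=3)},
    {"url": "https://example.com/old-story", "title": "Old story",
     "published": now - timedelta(hours=72)},
]
for sitemap in build_news_sitemaps(articles, "Example News", "en", now):
    print(sitemap)
```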

Extra tip:

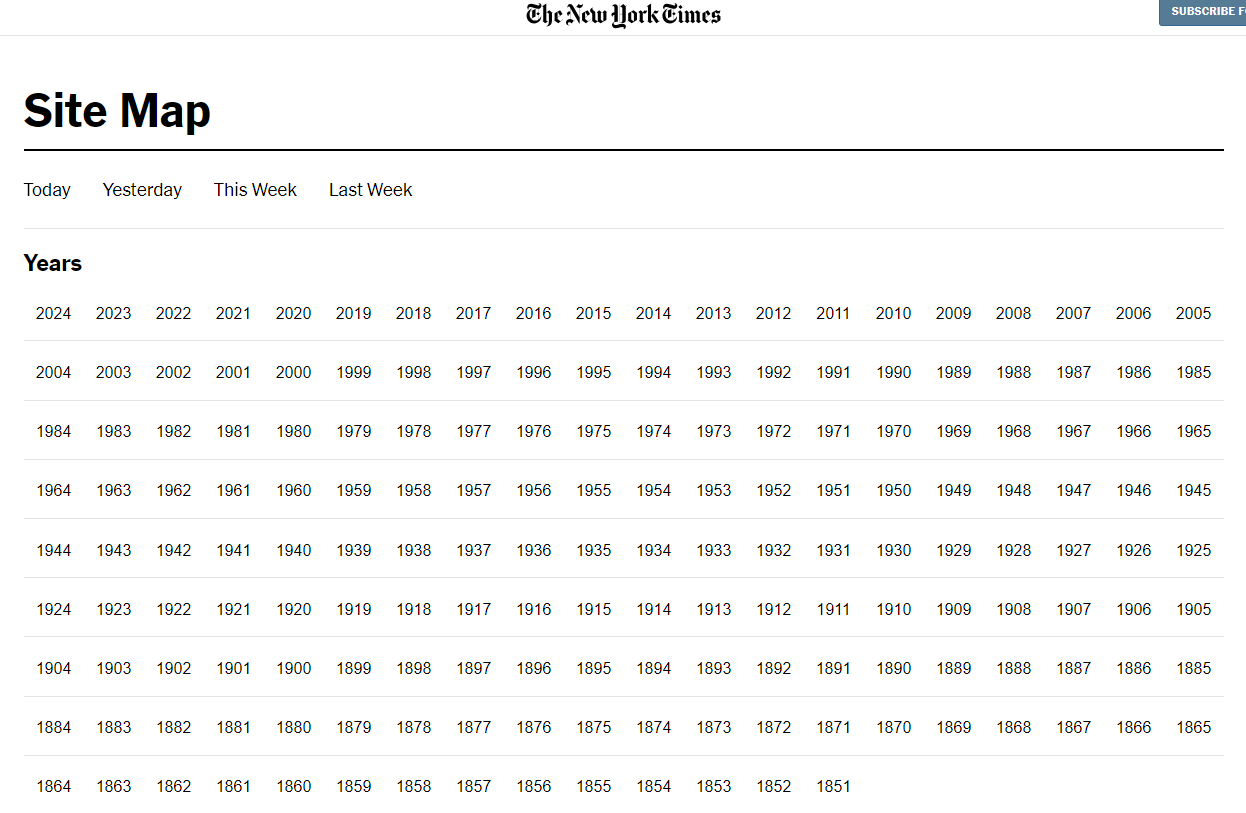

The other type of sitemap publishers should consider is an HTML sitemap. While this is intended more for users and cannot be submitted via Google Search Console, let’s remember the need for speed! Having an HTML sitemap on your website ensures the most recent articles are never more than "3 clicks" away from any page on the site. This in turn improves crawling. We picked up this idea from the amazing SEOs at the New York Times and implemented it for our own news client.

4. Review website architecture and URLs

Most news websites are huge! And only getting bigger by the minute. This requires publisher SEOs to think about website taxonomy.

Categories and topic segments

As a news publisher, making sure your categories are indexed and not disallowed in your robots.txt file is a smart move. A lot of news publishers segment their articles based on topics, e.g. politics or fashion. If those categories are not indexed, you are missing out on internal linking opportunities and potential rankings.

Pagination

Another thing to consider is how you handle pagination. There are three ways to do this (for any website, not just publishers):

- Traditional pagination: the user needs to click on Numbers or Next/Previous to load more news

- Load more buttons: the user can click to extend an initial set of displayed news

- Infinite scroll: the user doesn't need to do anything but scroll and the news articles are loaded automatically

While there is no set rule for the best pagination, one thing to consider is that Googlebot does not click on anything or scroll a page. It follows links to get to places. This means that old articles that are not loaded in the first view may be orphaned fairly quickly if they are not linked from other places, e.g. an HTML sitemap or other internal links.
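One way to reason about this is to treat the site as a graph of pages connected by plain links and measure how many clicks each article sits from the homepage. The sketch below runs a breadth-first search over a hand-written link map. The `links` dictionary and the page paths are invented for illustration; in practice the link data would come from a crawler export.

```python
from collections import deque


def click_depths(links, start):
    """Breadth-first search: minimum number of link clicks from `start` to each page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphaned_pages(links, start, all_pages):
    """Pages that exist on the site but cannot be reached by following links."""
    reachable = click_depths(links, start)
    return sorted(set(all_pages) - set(reachable))


# Hypothetical link map: page -> pages it links to with ordinary <a href> links.
links = {
    "/": ["/politics/", "/story-today"],
    "/politics/": ["/story-yesterday"],
    "/story-yesterday": ["/story-last-week"],
}
all_pages = ["/", "/politics/", "/story-today", "/story-yesterday",
             "/story-last-week", "/story-last-year"]

print(click_depths(links, "/"))
print(orphaned_pages(links, "/", all_pages))
```

Pages that turn up as orphaned, or that sit many clicks deep, are candidates for extra internal links.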

URL structure

One area that is particularly relevant for publishers is the URL. Due to the need for speed in news, Googlebot may pay more attention to the URL structure. By this, we don’t mean stuff your URLs with keywords! That won't give you results. But thinking about clearly outlining in the URL what the page is about is important.

One of the pitfalls we’ve seen on smaller publishers in particular is reusing the same URL structure (based on a similar or the same headline) for repeating news.

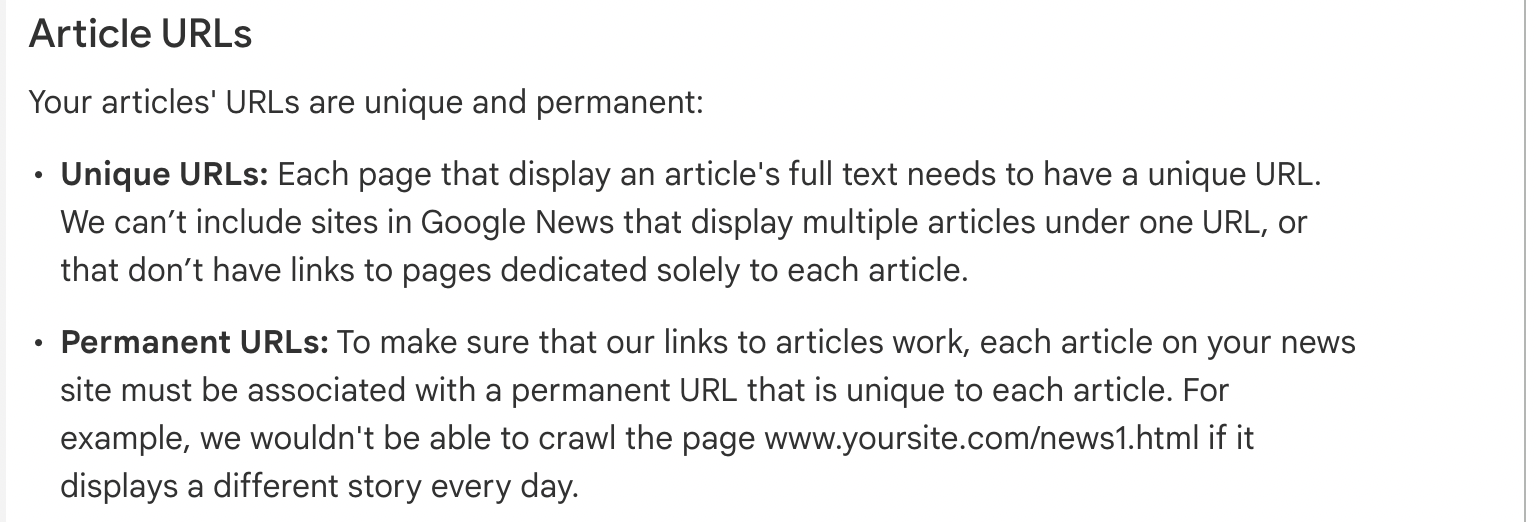

Google clearly states that article URLs must be unique and permanent:

Source: Google News Technical guidelines

Another URL strategy employed by top publishers ranking in Top Stories is ensuring that trending topics are continuously reported on using a fresh URL even for ongoing coverage. Google demands freshness for news, so the rationale is quite obvious here: a new URL each day ensures the content is always updated and eligible to appear in Top Stories.
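A simple way to get unique, permanent URLs for repeating coverage is to include the publication date in the path and to refuse to reuse a path that already exists. The following sketch assumes a `/YYYY/MM/DD/slug` pattern, which is just one possible convention and not something Google prescribes. The function names are hypothetical.

```python
import re
from datetime import date


def slugify(headline):
    """Lowercase the headline and replace runs of non-alphanumerics with hyphens."""
    return re.sub(r"[^a-z0-9]+", "-", headline.lower()).strip("-")


def article_path(headline, published, existing_paths):
    """Build a dated path, adding a numeric suffix if the path is already taken."""
    base = f"/{published:%Y/%m/%d}/{slugify(headline)}"
    path = base
    suffix = 2
    while path in existing_paths:
        path = f"{base}-{suffix}"
        suffix += 1
    return path


# The same recurring headline on two different days gets two different URLs.
existing = set()
monday = article_path("Retail sales: weekly round-up", date(2024, 3, 4), existing)
existing.add(monday)
tuesday = article_path("Retail sales: weekly round-up", date(2024, 3, 5), existing)
print(monday)
print(tuesday)
```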

Chris Lang, a brilliant SEO from Go Fish Digital shared an excellent video around this topic. He discovered this strategy being used by top publishers such as CNN, The New York Times and CNBC.

5. Optimize RSS and news feeds

Another technical element that is worth paying special attention to for SEOs working in publishing is the RSS feed.

Google Publisher Center – the interface that allows you to publish and manage your news – works based on RSS feeds. This is, again, not surprising considering the sector’s ‘need for speed’.

Like anything to do with SEO, RSS feeds are complex to understand and to get right, but here are three general tips and practices to get you started:

Speed up the RSS content frequency

By default, your RSS feeds will be updated every hour, and within Publisher Center you will be able to see a timestamp of when this has occurred. But in the fast-paced environment of news, an hour can be a looooong time! This is why you should consider increasing how often your content is read.

You can do this by implementing WebSub. WebSub is an open protocol that manages the flow between subscribers and publishers in real time. In simple terms, it ensures that subscribers are notified as soon as changes are made. So a newly published news piece will be pushed out instantly instead of waiting for the next scheduled read.

You can implement this directly or, most likely, you will use a plugin to get this onto your website (example of a WordPress plugin).
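If you implement it directly, the publisher side usually comes down to two things: advertising a hub in the feed (a link with `rel="hub"`), and pinging that hub whenever the feed changes. The sketch below builds such a ping. Note the assumptions: the WebSub specification leaves the publisher-to-hub notification up to each hub, and the `hub.mode=publish` form used here is a convention carried over from PubSubHubbub that many public hubs accept, so check your hub's documentation. The hub and feed URLs are placeholders.

```python
from urllib.parse import urlencode
from urllib.request import Request, urlopen


def build_publish_ping(hub_url, feed_url):
    """Build (but do not send) a form-encoded POST telling the hub the feed changed."""
    body = urlencode({"hub.mode": "publish", "hub.url": feed_url}).encode("utf-8")
    return Request(
        hub_url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/x-www-form-urlencoded"},
    )


# Placeholder URLs, for illustration only.
ping = build_publish_ping("https://hub.example.com/", "https://example.com/feed.xml")
print(ping.get_method(), ping.full_url)
print(ping.data.decode("utf-8"))

# To actually send the ping after publishing an article (not run here):
# with urlopen(ping, timeout=10) as response:
#     print(response.status)
```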

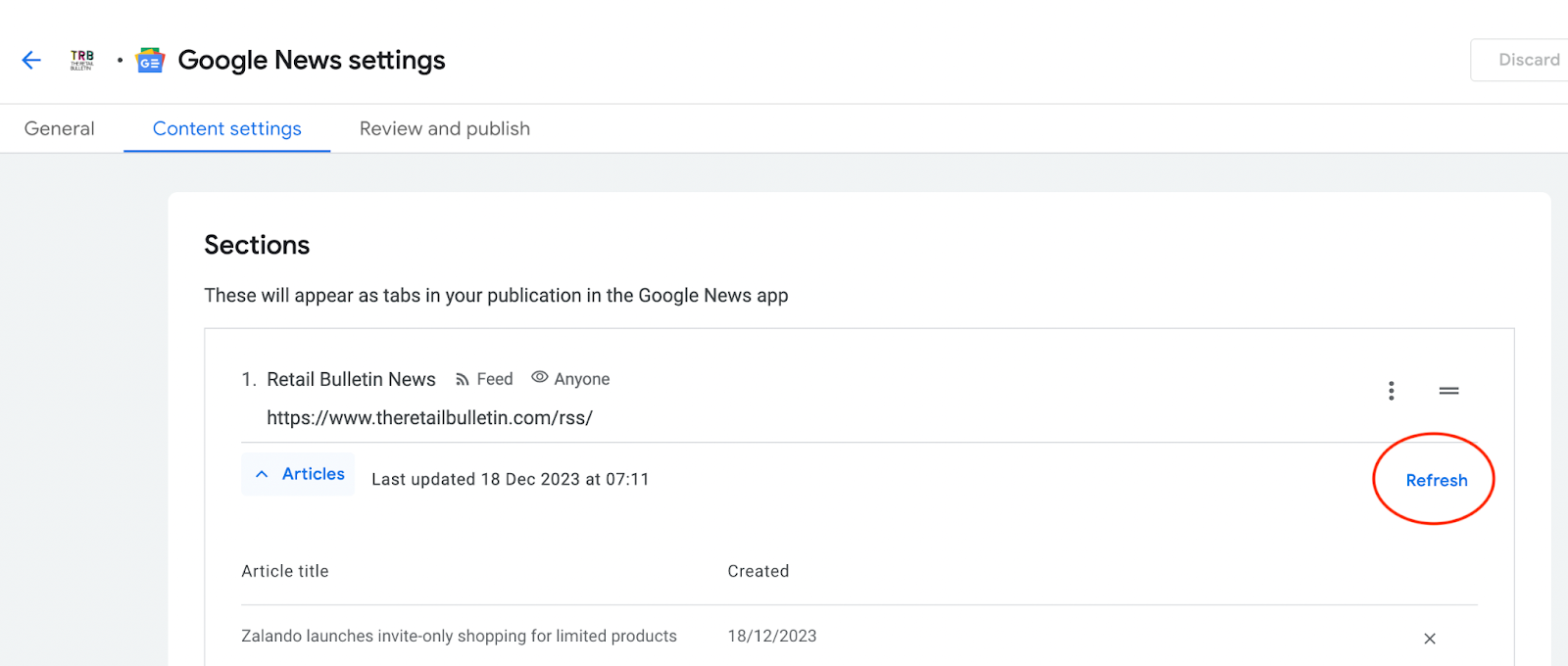

Alternatively, you can also refresh your feeds manually from Publisher Center. Open Publisher Center, access the Google News property you set up, choose Edit and go to Content Settings.

Test your feeds

You will want to ensure that your feed is running as it should. For example: is it updating, how often is it updating, and does it have all the correct properties?

There are several ways to test your RSS feed:

- Test your feed using a feed validation service

- Check your feed within Publisher Center, in the Content Settings

- Manually review your feed
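Part of the manual review can be scripted. The sketch below parses an RSS 2.0 feed with the standard library, flags items missing a title, link or publication date, and warns if the newest item is older than a chosen threshold. The list of fields it treats as required and the sample feed are assumptions made for this example; a feed validation service will check far more.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timedelta, timezone
from email.utils import parsedate_to_datetime

# Fields this sketch insists on for every item (an assumption, not the RSS spec).
REQUIRED_ITEM_FIELDS = ("title", "link", "pubDate")


def check_rss_feed(feed_xml, now, max_age):
    """Return a list of human-readable problems found in an RSS 2.0 feed."""
    root = ET.fromstring(feed_xml)
    items = root.findall("./channel/item")
    if not items:
        return ["feed has no <item> elements"]

    problems = []
    newest = None
    for index, item in enumerate(items, start=1):
        for field in REQUIRED_ITEM_FIELDS:
            if not (item.findtext(field) or "").strip():
                problems.append(f"item {index} is missing <{field}>")
        pub_date = (item.findtext("pubDate") or "").strip()
        if pub_date:
            try:
                published = parsedate_to_datetime(pub_date)
            except (TypeError, ValueError):
                problems.append(f"item {index} has an unparseable <pubDate>")
                continue
            if published.tzinfo is None:
                # Treat dates without a usable offset as UTC.
                published = published.replace(tzinfo=timezone.utc)
            if newest is None or published > newest:
                newest = published

    if newest is not None and now - newest > max_age:
        problems.append(f"newest item is older than {max_age}")
    return problems


SAMPLE_FEED = """<?xml version="1.0"?>
<rss version="2.0"><channel>
  <title>Example News</title>
  <item>
    <title>Budget vote passes</title>
    <link>https://example.com/budget-vote-passes</link>
    <pubDate>Mon, 04 Mar 2024 09:00:00 GMT</pubDate>
  </item>
  <item>
    <title>Story without a link</title>
    <pubDate>Mon, 04 Mar 2024 08:00:00 GMT</pubDate>
  </item>
</channel></rss>"""

now = datetime(2024, 3, 4, 12, 0, tzinfo=timezone.utc)
for problem in check_rss_feed(SAMPLE_FEED, now, max_age=timedelta(hours=1)):
    print(problem)
```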

Break up feeds based on news category

Most publishers will have categories or topics of news on their website. For example, if you are a retail publisher, chances are you have categories broken down into fashion, department stores, food and so on.

For user experience in Google News, and in general, for the organization of your feed, you should consider breaking up your feed into topics. For example, see New York Times’ business news RSS feed. This will also allow you to create topic-specific sections in your Google News through Publisher Center. All you need to do is to hook up your topic-specific feed in the Publisher Center Content Settings.

6. Manage crawl budget

Managing crawl budget is slightly overemphasized as a strategy by SEOs. It’s mentioned often, but it is rarely an actual problem. Crawl budget is the number of pages search engines will crawl on a website within a certain timeframe. In reality, most websites will not have crawl budget issues.

As Google notes, managing crawl budget is for:

- Large sites (1 million+ unique pages) with content that changes moderately often (once a week)

- Medium or larger sites (10,000+ unique pages) with very rapidly changing content (daily)

- Sites with a large portion of their total URLs classified by Search Console as Discovered - currently not indexed

Many publishers will, unfortunately, fall into one of these categories. So yes, for news publishers, keeping an eye on crawl budget is important.

There are several ways to ensure your crawl budget is managed. Two we usually recommend are:

Review and act on the Crawl Stats report in GSC

Hidden in the Settings tab of your GSC is your Crawl Stats Report. This is where it should all start! Get familiar with it. Some things to keep an eye on here are:

- Average Response Time: check if it is high. Higher response time means that fewer pages will be crawled on each visit. To mitigate this:

  - ensure your hosting capability is in line with the size of your news outlet (you will need to account for scale here!)

  - implement server-side caching, and

  - use CDNs.

- Response Codes: check for any 4XX and 5XX codes and action them. There is no need for crawling to be wasted on those!

- File Type: are JavaScript files the star of the show here? They shouldn't be. Go back and read the JavaScript section of this article.

Manage your crawl budget using robots.txt

It should be noted that this isn’t recommended unless you know what you’re doing! In some cases, it might be worth stopping crawling completely for certain pages, for example by disallowing internal search pages.

But do not use your robots.txt as a means to temporarily reallocate your crawl budget to other pages of your website. Only use it to block pages or resources that you don't want Google to crawl at all. Google won't shift this newly available crawl budget to other pages unless Google is already hitting your site's serving limit. Google has a great guide on managing crawling that has a robots.txt section. Be sure to read it.

An interesting debate has been raging recently in the publishing sector around blocking GPTBot and Google Bard (now Gemini) crawling using robots.txt as a means to prevent LLMs from learning from the news and using it to generate zero-click search results. This is something every publisher should keep an eye on, as it may have a direct impact on traffic and, as a result, revenue.
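Before deploying robots.txt changes, it is worth checking that they block what you intend and nothing more. The sketch below uses Python's built-in `urllib.robotparser` against an example file. The rules and URLs are illustrative only, and `Google-Extended` is used here on the assumption that it is the token Google documents for controlling Bard/Gemini usage. Python's parser also does not replicate every detail of Google's own matching rules, so treat this as a first check, not a replacement for Google's tools.

```python
from urllib.robotparser import RobotFileParser

# Example rules only: block AI-related agents entirely, block internal search for everyone.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: Google-Extended
Disallow: /

User-agent: *
Disallow: /search
"""


def crawl_permissions(robots_txt, checks):
    """Map each (user agent, URL) pair to True if the rules allow fetching it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {(agent, url): parser.can_fetch(agent, url) for agent, url in checks}


checks = [
    ("Googlebot", "https://example.com/category/politics/"),
    ("Googlebot", "https://example.com/search?q=retail"),
    ("GPTBot", "https://example.com/category/politics/"),
    ("Google-Extended", "https://example.com/category/politics/"),
]
for (agent, url), allowed in crawl_permissions(ROBOTS_TXT, checks).items():
    print(f"{agent:16} {'allowed' if allowed else 'blocked':8} {url}")
```

A check like this also catches the category problem mentioned earlier: if a category URL shows up as blocked for Googlebot, the rules are too broad.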

TL;DR summary

Technical SEO has never been the easiest of tasks and news publisher SEO is no exception. While the field is vast, SEOs working in the industry should at least cover the basics for a search engine-friendly news outlet technical setup.

It’s all about the ‘need for speed’ and your technical setup should reflect this. Ensuring the website can be crawled by implementing JavaScript correctly can make a huge difference in a situation where Google’s JS rendering might not happen fast enough.

Structured data markup can not only help crawling, it can also help your news outlet feature in important news surfaces. So will RSS feeds and a dedicated news sitemap.

Remember the constraints of your website as well. The frequent production of content means you need to pay attention to both your website architecture and your crawl budget. Pay attention to elements such as pagination that can choke your internal linking and remember your URLs.

Finally, before you dig into robots.txt, don’t forget to check the basics when managing crawl budget: the data available in your GSC Crawl Stats report.

Stay tuned for the next installment from Emina, which will go into the specifics of on-page SEO for news websites.

Emina is the Head of Digital Marketing at Vixen Digital, a Brighton UK-based digital marketing agency. She has over 10 years of experience in SEO and digital marketing. Her special connection to publishers also comes from her BA in Journalism. Emina’s marketing passions include technical/on-page SEO, analytics, channel alignment and automation.